Arc

It is relatively easy to capture our static, everyday lives, and I think we can all agree that we have enough pictures of people's food. It is much harder to capture the more exciting side of life: our active moments, such as rock climbing, mountain biking, skiing, snowboarding, bungee jumping, skateboarding, motocross, parkour, playing sports, or running around with the kids.

Currently, the best ways to capture these moments are either to have a friend film us (which keeps them from having fun themselves) or to build contraptions such as the GoPro mounted on a ski pole in the video to the right. This solution has two problems. First, the person has to concentrate on filming instead of on having fun and performing their tricks. Second, as the video to the right shows, the person is stationary relative to the camera, so the landscape appears to move instead of the person. Not only can this be nauseating, but it also drains much of the thrill from the footage.

This is where Arc fits in. We want to detach the camera from the body and automatically provide the perfect perspective for capturing these events. To bring this idea to fruition, we built a gyro-stabilized camera mount and attached it to a custom quadrotor. To let the quadrotor track the user effortlessly, we built the Arduino-based GPS tracker pictured on the left. As a bonus, the tracker doubles as a replica bomb detonator :-) The device continuously sends your position to the quadrotor so it can maintain the perfect perspective of your activity. Even better, future products will let users broadcast their position from their smartphones. See the video below for a demo of this capability.
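The page does not describe how the quadrotor turns the tracker's position into a flight target. As a hedged illustration of the geometry any such follow loop needs, the sketch below does three things with two GPS fixes: it computes the great-circle distance and bearing between them, picks a standoff setpoint beside the user, and finds the camera yaw that points back at the user. The spherical-Earth model, the 15 m standoff, the "hover to the user's side" rule, and all function names (`haversine_m`, `initial_bearing_deg`, `destination`, `follow_setpoint`) are assumptions for illustration, not Arc's actual controller.

```python
import math
from typing import Tuple

EARTH_RADIUS_M = 6_371_000.0  # mean Earth radius; spherical-Earth approximation (assumption)


def haversine_m(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Great-circle distance in metres between two (lat, lon) points given in degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    a = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_M * math.atan2(math.sqrt(a), math.sqrt(1 - a))


def initial_bearing_deg(lat1: float, lon1: float, lat2: float, lon2: float) -> float:
    """Initial compass bearing (0 = north, 90 = east) from point 1 toward point 2."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dl = math.radians(lon2 - lon1)
    y = math.sin(dl) * math.cos(p2)
    x = math.cos(p1) * math.sin(p2) - math.sin(p1) * math.cos(p2) * math.cos(dl)
    return (math.degrees(math.atan2(y, x)) + 360.0) % 360.0


def destination(lat: float, lon: float, bearing_deg: float, distance_m: float) -> Tuple[float, float]:
    """Point reached by travelling distance_m from (lat, lon) along bearing_deg."""
    p1, l1 = math.radians(lat), math.radians(lon)
    theta = math.radians(bearing_deg)
    delta = distance_m / EARTH_RADIUS_M  # angular distance in radians
    p2 = math.asin(math.sin(p1) * math.cos(delta)
                   + math.cos(p1) * math.sin(delta) * math.cos(theta))
    l2 = l1 + math.atan2(math.sin(theta) * math.sin(delta) * math.cos(p1),
                         math.cos(delta) - math.sin(p1) * math.sin(p2))
    return math.degrees(p2), math.degrees(l2)


def follow_setpoint(user_lat: float, user_lon: float, user_heading_deg: float,
                    standoff_m: float = 15.0, side_deg: float = 90.0) -> Tuple[float, float, float]:
    """Hypothetical follow rule: hover standoff_m from the user, side_deg clockwise
    from their heading, and yaw the camera back toward them.

    Returns (setpoint_lat, setpoint_lon, camera_yaw_deg).
    """
    bearing = (user_heading_deg + side_deg) % 360.0
    lat, lon = destination(user_lat, user_lon, bearing, standoff_m)
    yaw = initial_bearing_deg(lat, lon, user_lat, user_lon)
    return lat, lon, yaw
```

For example, `follow_setpoint(37.0, -122.0, 0.0)` for a user heading north places the setpoint 15 m to their east, with the camera yawed roughly due west (about 270 degrees). Keeping the quadrotor beside the user and moving with them is one way to avoid the moving-landscape effect described above. In practice, consumer GPS fixes are typically off by a few metres, so positions would need filtering before they drive a setpoint.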

Demo Reel

Topographik

The arrival of cheap 3D sensors, such as the Microsoft Kinect, will open up many exciting applications that were previously out of reach because acquiring the data was too expensive. One area that stands to benefit greatly from this technology is online shopping. Almost every kind of product can now be ordered online effectively, with the notable exception of clothing. Shoes are a partial exception within apparel: Zappos revolutionized that market by offering free return shipping, which eased consumers' minds. However, this model doesn't translate to clothing in general. The huge variation in shapes, fits, and sizes makes it difficult for consumers to know whether an article of clothing will fit well. This is where cheap 3D sensors come in: because they are inexpensive, they can be used to measure consumers and ensure a proper fit before ordering, thereby drastically reducing the number of returns.

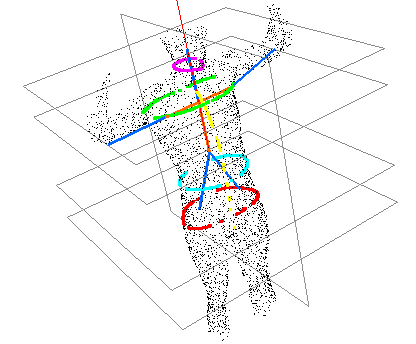

To spare users the pain of manually measuring dozens of dimensions, we have created software that automates the task. The user stands in front of the Kinect sensor and turns a full 360 degrees. As the user spins, we capture 3D point cloud snapshots of the body. Once the turn is complete, our algorithms process the data. Because each snapshot overlaps with the previous one, we register consecutive point clouds to each other and stitch them into a single dense 3D point cloud of the user's body. From this point cloud, we build a mesh and calculate the dimensions at the locations relevant to the clothing. The image on the right shows a sample output of our algorithm.
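The page does not say which registration method is used. A common choice for aligning overlapping point clouds is iterative closest point (ICP). Below is a minimal point-to-point ICP sketch in Python with NumPy. It uses brute-force nearest neighbours and the SVD-based (Kabsch) rigid fit. The function names `best_fit_transform` and `icp` are hypothetical, and a production pipeline would add a k-d tree, outlier rejection, and a good initial guess.

```python
from typing import Tuple

import numpy as np


def best_fit_transform(src: np.ndarray, dst: np.ndarray) -> Tuple[np.ndarray, np.ndarray]:
    """Least-squares rigid transform (R, t) with dst ~= src @ R.T + t (Kabsch / SVD).

    src and dst are (N, 3) arrays whose rows are corresponding points.
    """
    src_centroid = src.mean(axis=0)
    dst_centroid = dst.mean(axis=0)
    # Cross-covariance matrix of the centred point sets.
    H = (src - src_centroid).T @ (dst - dst_centroid)
    U, _, Vt = np.linalg.svd(H)
    R = Vt.T @ U.T
    # If the SVD produced a reflection (det = -1), flip the last axis to get a rotation.
    if np.linalg.det(R) < 0:
        Vt[-1, :] *= -1
        R = Vt.T @ U.T
    t = dst_centroid - R @ src_centroid
    return R, t


def icp(src: np.ndarray, dst: np.ndarray, max_iters: int = 50,
        tol: float = 1e-10) -> Tuple[np.ndarray, np.ndarray]:
    """Point-to-point ICP aligning src (N, 3) onto dst (M, 3).

    Returns the accumulated (R, t) such that src @ R.T + t ~= dst.
    """
    current = src.copy()
    R_total = np.eye(3)
    t_total = np.zeros(3)
    prev_err = np.inf
    for _ in range(max_iters):
        # Brute-force nearest neighbour in dst for every current point (O(N*M) memory).
        d2 = ((current[:, None, :] - dst[None, :, :]) ** 2).sum(axis=2)
        nearest = d2.argmin(axis=1)
        err = np.sqrt(d2.min(axis=1)).mean()
        # Stop once the mean residual no longer improves meaningfully.
        if prev_err - err < tol:
            break
        prev_err = err
        R, t = best_fit_transform(current, dst[nearest])
        current = current @ R.T + t
        # Compose this step with the transform accumulated so far.
        R_total = R @ R_total
        t_total = R @ t_total + t
    return R_total, t_total
```

To assemble a full body cloud, one could register each snapshot to its predecessor and chain the resulting transforms into the first snapshot's frame. Chaining accumulates drift over a full turn, which is one reason practical systems often add a loop-closure step between the last and first snapshots.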
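The page computes dimensions from a mesh. As a simpler illustration that works directly on the point cloud, a tape-measure-style circumference (for example at the waist or chest) can be approximated as follows. Slice a thin horizontal band of points and take the perimeter of their convex hull, since a taut tape bridges concavities much as a hull does. This sketch assumes y is the vertical axis and units are metres. The function names and the `band` parameter are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Point2 = Tuple[float, float]
Point3 = Tuple[float, float, float]


def _cross(o: Point2, a: Point2, b: Point2) -> float:
    """Z component of (a - o) x (b - o); positive means a counter-clockwise turn."""
    return (a[0] - o[0]) * (b[1] - o[1]) - (a[1] - o[1]) * (b[0] - o[0])


def convex_hull(points: Sequence[Point2]) -> List[Point2]:
    """Andrew's monotone chain; returns hull vertices in counter-clockwise order."""
    pts = sorted(set(points))
    if len(pts) <= 2:
        return pts
    lower: List[Point2] = []
    for p in pts:
        while len(lower) >= 2 and _cross(lower[-2], lower[-1], p) <= 0:
            lower.pop()
        lower.append(p)
    upper: List[Point2] = []
    for p in reversed(pts):
        while len(upper) >= 2 and _cross(upper[-2], upper[-1], p) <= 0:
            upper.pop()
        upper.append(p)
    # The last point of each chain is the first point of the other, so drop it.
    return lower[:-1] + upper[:-1]


def circumference_at_height(cloud: Sequence[Point3], height: float,
                            band: float = 0.01) -> float:
    """Approximate tape-measure circumference of the body at `height`.

    Assumes y is the vertical axis and coordinates are in metres.
    """
    # Keep points within +/- band of the height, projected onto the horizontal x-z plane.
    ring = [(x, z) for x, y, z in cloud if abs(y - height) <= band]
    hull = convex_hull(ring)
    if len(hull) < 2:
        return 0.0
    return sum(math.dist(hull[i], hull[(i + 1) % len(hull)]) for i in range(len(hull)))
```

The convex hull slightly overestimates wherever a real tape would dip into a concavity, such as the small of the back. Measuring along the mesh surface, as the page describes, avoids that simplification.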